Today, we live in a world where breaking news is almost instantly captured by the crowd and shared on social networks such as Twitter, Instagram or TikTok. Yet media companies are currently unable to effectively extract meaningful information from the deluge of visual data uploaded online every second. The VITA laboratory is developing technologies to do so; they were used, for example, in 2019 to estimate the size of the crowd during the 14 June women’s strike in Geneva, using images and videos captured by demonstrators. The resulting article, published by Heidi.news, even questioned the official estimates.

In this project, the VITA laboratory, in partnership with RTS, aims to develop computationally efficient methods that go beyond crowd counting and extract a rich set of semantic information, ranging from lists of objects to human actions and their relationships. The proposed technology will allow complex metadata to be automatically extracted from any image or video. By working in real time on live or crowdsourced content, it will give journalists information that enhances the quality of their coverage and lets them produce new and innovative formats. Content recommendation and content retrieval systems will also benefit greatly from the enriched metadata.

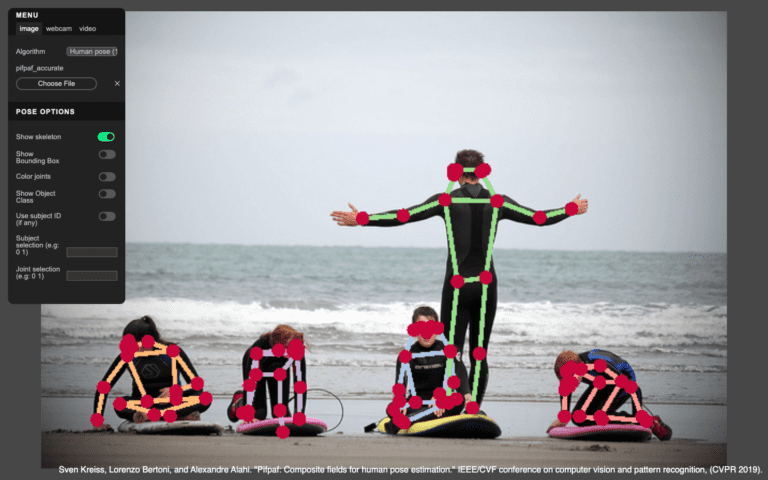

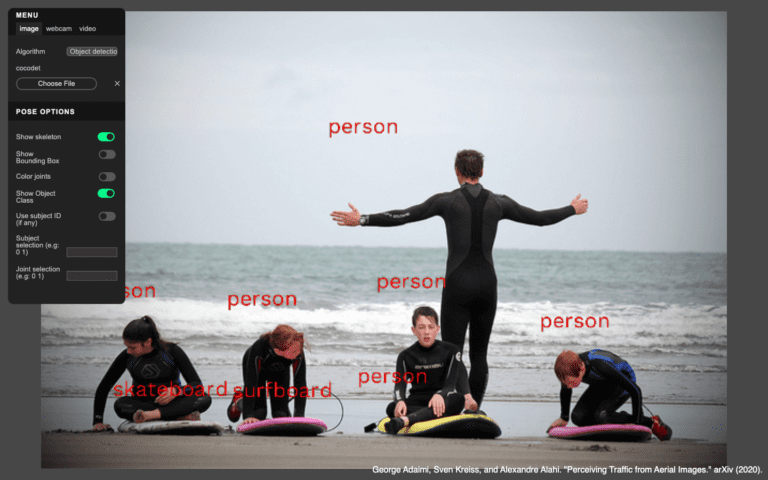

The VITA laboratory has now set up a web platform called Movements that allows scientists and engineers to test all newly developed detection methods on images, on videos and even in real time using a webcam.
